Solr nodes should be like cattle, not pets!

Every week, somewhere in the world, at least one DevOps engineer responsible for a non-trivially sized Solr cluster thinks this while dealing with SolrCloud operations such as cluster restarts. The sad reality is that Solr nodes still require careful hand-holding (like pets do) during cluster-wide changes to ensure zero downtime and stability.

The way single replica state changes are handled in the existing SolrCloud design limits the scalability potential of SolrCloud. The design worked fine back in the day when Solr clusters had a handful of nodes, say fewer than 10, and a handful of collections. But with 1000+ collections and tens or hundreds of nodes today, SolrCloud has some serious operational challenges in maintaining 100% uptime.

In Apache Solr 8.8 and 8.8.1, a new solution has been released. However, before jumping to the solution, let us look at how single replica state changes are handled in Solr today.

Current design for replica state updates

Every single replica state change starts a cycle of the following operations:

- The replica posts a message into the overseer queue

- Overseer reads the message

- Overseer updates the state.json for the collection

- Overseer deletes the message from the queue

- Every node in the cluster that hosts the collection gets an event notification (via ZK watchers) about the change in state.json. They fetch from ZK and update their view of the collection.

Challenges with current design

You might ask, what’s the problem with how Solr handles these changes? Let us look into that and see why this could become a problem:

- The number of events fired increases linearly with the number of replicas in a collection & the total number of collections

- The size of state.json increases linearly with the number of shards and replicas in a collection

- The number of ZooKeeper reads and the size of data read from ZK increases quadratically with the number of nodes, collections, replicas
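To make the quadratic growth concrete, here is a rough back-of-the-envelope sketch. It is not a measurement: the per-replica entry size, the number of state changes per restart, and the function names are all assumptions chosen for illustration. It only models the reasoning above: every state change rewrites state.json, and every node hosting the collection then re-reads the whole file.

```python
# Back-of-the-envelope model of ZK read traffic under the single state.json
# design. Every constant here is an illustrative assumption, not a measurement.

BYTES_PER_REPLICA_ENTRY = 300  # assumed average size of one replica entry in state.json
CHANGES_PER_RESTART = 3        # assumed: down -> recovering -> active


def state_json_size(num_replicas: int) -> int:
    """state.json grows linearly with the number of replicas in the collection."""
    return num_replicas * BYTES_PER_REPLICA_ENTRY


def bytes_read_for_restart(num_replicas: int, hosting_nodes: int) -> int:
    """Total bytes fetched from ZK when every replica of a collection restarts.

    Each state change triggers a watcher event, and each node hosting the
    collection responds by re-reading the full state.json.
    """
    events = num_replicas * CHANGES_PER_RESTART
    return events * hosting_nodes * state_json_size(num_replicas)


if __name__ == "__main__":
    for replicas in (10, 100, 1000):
        total = bytes_read_for_restart(replicas, hosting_nodes=10)
        print(f"{replicas:>5} replicas: {total / 1e6:,.2f} MB read from ZK")
```

With the number of hosting nodes held fixed, doubling the replica count quadruples the bytes read in this model, because both the number of events and the size of each read grow with the replica count.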

Since a cluster has a single Overseer that processes the messages from the queue, an increase in the number of nodes, collections and replicas can slow down the processing of state update messages, ultimately leading to a failure in the cluster. Recovering a cluster that has failed this way is very hard.

If we quickly look at the situation with individual replica state changes, here are two main problems that affect the overall SolrCloud operations:

- Overseer Bottlenecks: In most production workloads, about 90-95% of the overseer messages are “state updates”. Other collection API operations (e.g. ADDREPLICA, CREATE, SPLITSHARD etc.) get slowed down (or time out) because the overseer is busy processing excessive state update messages.

- Instability: Restarting more than a few nodes at a time can lead to cascading instability for the entire cluster due to the generation of excessive state update messages (proportional to the number of replicas hosted on a node and the number of nodes restarted).

Introducing Per Replica States

Apache Solr 8.8 and 8.8.1 have a new solution developed by Noble Paul and Ishan Chattopadhyaya, with support from FullStory.

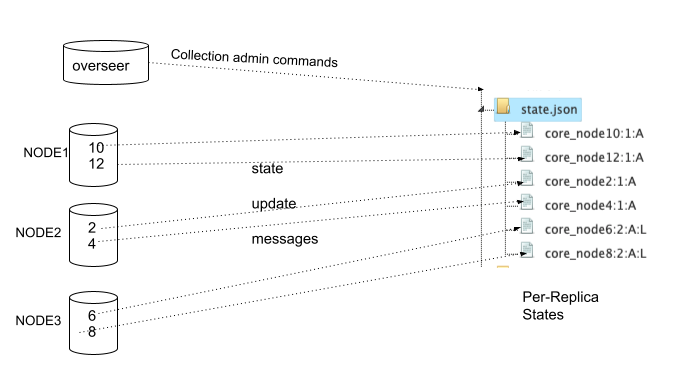

Instead of a single state.json that contains both the structure of the collection and the individual replica states, the solution follows a “Per Replica State” (PRS) approach, as follows:

- Every replica’s state is in a separate znode nested under the state.json, with a name that encodes the replica name, state and the leadership status.

- For nodes watching the states for a collection, a new “children watcher” (in addition to data watcher) is set on state.json.

- Upon a state change, a ZK multi-operation is used to (a) delete the previous znode for the replica, and (b) add a new znode with the updated state.

- This multi-operation is performed by individual nodes (that host the replica whose state is changing) directly, instead of going via overseer and overseer queue.
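The delete-and-create step can be sketched with a small in-memory model. This is not Solr or ZooKeeper code: `CollectionStates` and its methods are hypothetical names, and a lock stands in for the all-or-nothing guarantee that a real ZK multi-operation provides. The znode names are made-up examples.

```python
import threading


class CollectionStates:
    """Toy stand-in for the child znodes under a collection's state.json."""

    def __init__(self):
        self._children = set()
        # The lock plays the role of ZK's atomic multi-operation (an assumption
        # of this sketch; a real cluster gets atomicity from ZooKeeper itself).
        self._lock = threading.Lock()

    def create(self, name: str) -> None:
        with self._lock:
            self._children.add(name)

    def swap(self, old_name: str, new_name: str) -> None:
        """Delete old_name and create new_name as one all-or-nothing step."""
        with self._lock:
            if old_name not in self._children:
                raise KeyError(f"no such znode: {old_name}")
            if new_name in self._children:
                raise ValueError(f"znode already exists: {new_name}")
            self._children.remove(old_name)
            self._children.add(new_name)

    def children(self) -> list:
        """What a node with a children watcher would see after an event."""
        with self._lock:
            return sorted(self._children)


if __name__ == "__main__":
    states = CollectionStates()
    states.create("core_node1:0:D")
    states.create("core_node2:0:A:L")
    # The node hosting core_node1 publishes its own state change directly.
    states.swap("core_node1:0:D", "core_node1:1:A")
    print(states.children())
```

Because each replica owns its own entry, two replicas changing state at the same time never rewrite the same piece of data, which is the property the rest of this post relies on.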

The benefits of this approach are easiest to see on a large Solr cluster (lots of nodes, lots of collections):

- Minimize data writes/reads: With the per replica state approach, the data written to ZK is dramatically reduced. For a simple state update, the data written to ZK is just 10 bytes, instead of the 100+ KB written in the single state.json model, where every update rewrites the state of a large collection. The data read by nodes is also minimal and the deserialization costs are negligible (no JSON parsing needed).

- Reduce overhead of overseer: State updates are performed as a direct znode update from the respective nodes, without going through the overseer queue.

- Increased concurrency while writing to states: With PRS, we can modify the states of hundreds of replicas in a collection in parallel without any contention, as each replica state is a separate znode. This means a rolling restart of a cluster can safely restart more nodes at once than with the previous approach.

- The PRS approach reduces the memory pressure on Solr (on the overseer, as well as regular nodes), ultimately enhancing Apache Solr’s scalability potential.

Design

- The state information for each replica is encoded as a child znode of the state.json znode for the collection. The overall structure of the collection (names and locations of shards, replicas etc.) is still reflected in state.json.

- This encoding follows the syntax $N:$V:$S or $N:$V:$S:L, where $N is the core node name of the replica (as specified in state.json), $V is the version of the update (it increases every time this replica’s state is updated), and $S is the state (A for active, R for recovering, D for down). If the replica is a leader, ":L" is appended.

- When a replica changes state (e.g. as a result of a node restarting, or intermittent failures), the state update is applied directly to these child znodes of state.json.
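As an illustration of the naming scheme, the following sketch parses and re-encodes such znode names. It is not Solr's implementation: `ReplicaState` and `parse_replica_state` are hypothetical names, the replica name in the usage example is made up, and only the three state letters listed above are handled.

```python
from dataclasses import dataclass

# State letters as described in this post; anything else is rejected here.
STATE_NAMES = {"A": "active", "R": "recovering", "D": "down"}


@dataclass(frozen=True)
class ReplicaState:
    name: str          # $N: core node name of the replica
    version: int       # $V: bumped on every state update
    state: str         # $S: one of A, R, D
    is_leader: bool = False

    def encode(self) -> str:
        """Render the znode name, appending ':L' only for leaders."""
        base = f"{self.name}:{self.version}:{self.state}"
        return base + ":L" if self.is_leader else base


def parse_replica_state(znode_name: str) -> ReplicaState:
    """Parse '$N:$V:$S' or '$N:$V:$S:L' into a ReplicaState."""
    parts = znode_name.split(":")
    if len(parts) not in (3, 4):
        raise ValueError(f"unexpected znode name: {znode_name!r}")
    name, version, state = parts[:3]
    if state not in STATE_NAMES:
        raise ValueError(f"unknown state {state!r} in {znode_name!r}")
    if len(parts) == 4 and parts[3] != "L":
        raise ValueError(f"unexpected suffix in {znode_name!r}")
    return ReplicaState(name, int(version), state, len(parts) == 4)


if __name__ == "__main__":
    parsed = parse_replica_state("core_node3:4:A:L")
    print(parsed, STATE_NAMES[parsed.state])
```

Note that the whole state fits in the znode name, so a node can learn every replica's state from a single children listing without parsing any JSON.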

How to use this?

This feature is enabled on a per-collection basis with a special flag (perReplicaState=true/false). When a collection is created, this parameter can be passed along to enable this feature.

http://localhost:8983/solr/admin/collections?action=CREATE&name=collection-name&numShards=1&perReplicaState=true

This attribute is modifiable, so an existing collection can be migrated to the new format using a MODIFYCOLLECTION command:

http://localhost:8983/solr/admin/collections?action=MODIFYCOLLECTION&collection=collection-name&perReplicaState=true

Similarly, it can be switched back to the old format by flipping the flag

http://localhost:8983/solr/admin/collections?action=MODIFYCOLLECTION&collection=collection-name&perReplicaState=false
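For scripting these calls, a small helper can build the same URLs. This is only a sketch: the helper names are hypothetical, and the base URL and collection name are the placeholders used in the examples above.

```python
from urllib.parse import urlencode

SOLR_BASE = "http://localhost:8983/solr"  # placeholder host, as in the examples above


def collections_api_url(action: str, **params: str) -> str:
    """Build a Collections API URL for the given action and parameters."""
    query = urlencode({"action": action, **params})
    return f"{SOLR_BASE}/admin/collections?{query}"


def create_with_prs_url(name: str, num_shards: int = 1) -> str:
    """URL that creates a collection with per replica states enabled."""
    return collections_api_url(
        "CREATE", name=name, numShards=str(num_shards), perReplicaState="true"
    )


def set_prs_url(collection: str, enabled: bool) -> str:
    """URL that switches an existing collection to or from the new format."""
    return collections_api_url(
        "MODIFYCOLLECTION",
        collection=collection,
        perReplicaState=str(enabled).lower(),
    )


if __name__ == "__main__":
    print(create_with_prs_url("collection-name"))
    print(set_prs_url("collection-name", True))
    print(set_prs_url("collection-name", False))
```

The helpers only build strings. To actually issue a request, pass the result to an HTTP client such as `urllib.request.urlopen`, ideally against a non-production cluster first.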

Conclusion

In a subsequent post, we shall present benchmarks of this new solution compared to the baselines. Some of those have been discussed in https://issues.apache.org/jira/browse/SOLR-15052. As with all new features, please give this a try in a non-production environment first, and report bugs (if any) to the Apache Solr JIRA.